Ever found it fascinating how police forensic artists can draw up sketches based on seemingly nondescript verbal descriptions? Now you can do that too, with the help of AI.

A team of researchers from the Chinese Academy of Sciences, China's state-owned research institute, and the City University of Hong Kong has designed a neural network model that generates high-quality human portraits from sketches.

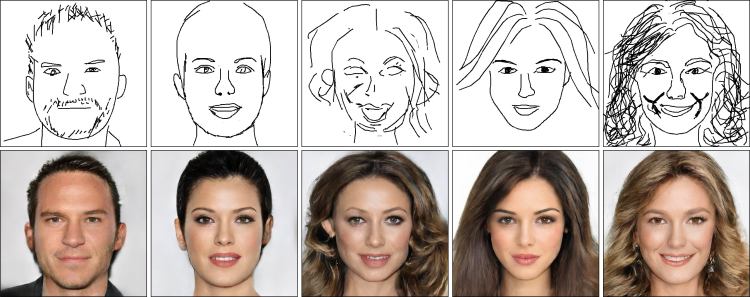

The deep learning-based sketch-to-image generation system, called DeepFaceDrawing, can be used in various applications including criminal investigation, character design, and educational training, according to the research paper.

Current deep image-to-image translation techniques have already achieved fast generation of face images from sketches. However, existing solutions place high demands on sketch quality: they can only generate lifelike face images from professional sketches, or even from highly detailed maps that contain distinct facial features.

"Their problems are formulated more like reconstruction problem with input sketches as hard constraints," according to the paper. In practice, these constraints make it harder for non-professionals to use the technology.

To address these issues, the team came up with a local-to-global approach that draws on a massive database of facial features, such as eyes and noses, to fill in the blanks and assemble those features into generated portrait images, without the need for a detailed sketch.

This novel approach handles face generation in a way that's similar to how the human brain works in related scenarios, by taking existing information about facial features into consideration and instinctively working out the most plausible end result.

The team tested the AI with 60 participants, most of whom had no professional training in drawing. Each participant was shown 22 sketches, each paired with three portraits generated by different methods, including the team's. The DeepFaceDrawing results consistently proved to be of higher quality, or more "faithful," than those of the other two methods.

Besides police sketches, the researchers detailed other uses for DeepFaceDrawing. One is face morphing, seen in videos often found on social networks that morph one celebrity's face into another's. Another is copying one person's face onto another's head, similar to what the public would refer to as DeepFake.

Although the AI system was developed by a Chinese team, its use outside the state apparatus in China could face hurdles, as the country's top legislative body has raised concerns about technologies that can digitally swap or generate faces.

China's Civil Code, which was deliberated by the Standing Committee of the National People's Congress during this year's Two Sessions, statutorily defined privacy and private information for the first time, and stipulated that individual portrait rights should not be violated by means of digital forgery.

The restrictions, announced on May 21, were the country's latest attempt to curb the spread of DeepFake face-swapping technologies that could potentially be used against popular figures such as politicians and celebrities.

DeepFake videos have been surging across the Chinese internet since last year. In September last year, face-swap app Zao, owned by Chinese dating and live-streaming service company Momo, quickly rocketed to the top of the free download chart on China’s App Store. The app enabled users to swap their faces into TV drama clips, making DeepFake technology available to the public for the first time.

On Bilibili, a streaming website, a video that used similar technology to swap celebrity Yang Mi's face onto that of Hong Kong movie star Athena Chu in a 1994 drama series amassed more than 100 million views, raising concerns about the negative impact of DeepFake on personal privacy and credibility.